On Wednesday 7 November

2012 GNS Science won the “Open Science” category at the New Zealand Open Source Awards 2012 (we also won the “Government” category for GeoNet Rapid, see

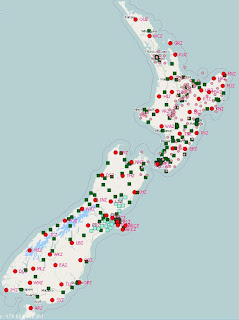

Figure 1).

From Wikipedia:

“Open science is the umbrella term of the movement to make scientific research, data and dissemination accessible to all levels of an inquiring society, amateur or professional. It encompasses practices such as publishing open research, campaigning for open access, encouraging scientists to practice open notebook science, and generally making it easier to publish and communicate scientific knowledge.”

For GeoNet, Open Science is all about our Open Data policy, which was a founding principle of GeoNet and a very important factor in our success. The policy has allowed rapid uptake of our data and has enabled third-party websites to use GeoNet information in novel ways, including websites with a regional focus (such as Canterbury Quake Live, which began operating after the start of the Canterbury earthquake sequence in 2010).

Many New Zealanders reading this will remember the “user pays” phase of our development, which started in the late 1970s, accelerated through the 1980s and 1990s, and continued into the 2000s. During this period it was government policy that all data and information had an immediate intrinsic value that must be paid for by the “end user”. The result was a drastic drop in the use of many data sources, and a trend for policy and decision making to become “data free zones”.

When GeoNet began operation in 2001, the concept of Open Data was very unusual in New Zealand. Its inclusion as a requirement in the contract between the Earthquake Commission (EQC) and GNS Science was therefore revolutionary, and one of several ground-breaking features of the arrangements between the two organisations. EQC's insistence on an Open Data policy is yet another demonstration of how visionary and forward-thinking the management and Board of EQC were at the time (and continue to be) in their support of GeoNet and its part in New Zealand's geological hazards mitigation strategy. What if the Canterbury earthquakes had occurred before the establishment of GeoNet, when there was only one real-time seismic sensor in the whole of Canterbury?

There has been a huge change in the last decade, and now most institutions in New Zealand (and internationally) accept the value proposition that Open Data is important for the advancement of science and the overall goals of New Zealand society (and GeoNet now has over 600 sensor network sites). We live in a beautiful but geologically active land. In our naturally active environment, the GeoNet Open Data policy has quickly led to a better understanding of the perils we face and the mitigation measures required.

For example, following the destructive Christchurch Earthquake of 22 February 2011, the openly available GeoNet strong ground shaking data were crucial to understanding the levels of damage and what changes were needed in the building codes before reconstruction began. The observed level of damage was greater than expected from a moderate-sized earthquake, but the data were available to demonstrate the very high energy (maximum shaking levels over twice the force of gravity) relative to the magnitude. Detailed earthquake source modelling was possible, showing that the fault ruptured up to a shallow depth beneath the Christchurch central business district. GeoNet data are central to four Canterbury special issues of scientific journals and feature in numerous scientific papers and conference presentations, enriching our understanding of this important earthquake sequence.

So Open Data it is, and we are pleased that after 12 years the significance of the GeoNet Open Data policy is publicly recognised, thanks to the New Zealand Open Source Awards!